It's interesting to see so much investor money chasing AI unicorns at the moment. Something tells me many of them might be making the wrong bets.

I feel like with a lot of new technology, you have the frontrunner who proves something is possible, and then you have those who build on that work to make it cheaper, more efficient, etc.

Indeed. The first mover often doesn't reap the benefits of their move. They have to spend a lot of resources trying out dozens of wrong ideas before they find the right one, and then everyone else can just look at what they're doing and start straight away on that. The followers often get a fresh, clean start, which can make a better foundation going forward.

On the one hand that feels sadly unfair, but on the other hand OpenAI abused the concept of being an open non-profit to get to where they are so I don’t really have any sympathy.

I’m trying to remember the book I was reading. First mover advantage… Only something like 35% of first movers stay in business after two years.

In two years… OpenAI is definitely going to be absorbed by a big company, probably Microsoft. But by that time, Facebook and Google will have strong contenders ready to go.

What’s the baseline though? If only 10% of non-first movers in a new industry stay in business, being a first mover is still a comparative advantage.

There’s a business phrase about how pioneers usually end up with a bunch of arrows in their back.

Eh, whether legit or not, they tried to seek funding through alternative means, like educational institutions, but were getting nowhere.

As much as it sucks, the Microsoft deal made a ton of sense for both parties: they got the massive amount of compute needed to train their models, and MS got AI.

Truth is, they are an AI company, and it is EXPENSIVE to push that tech. Idk if they could've done it a better way, maybe, but there's no way they'd be where they are now without MS.

That’s been the case for a while.

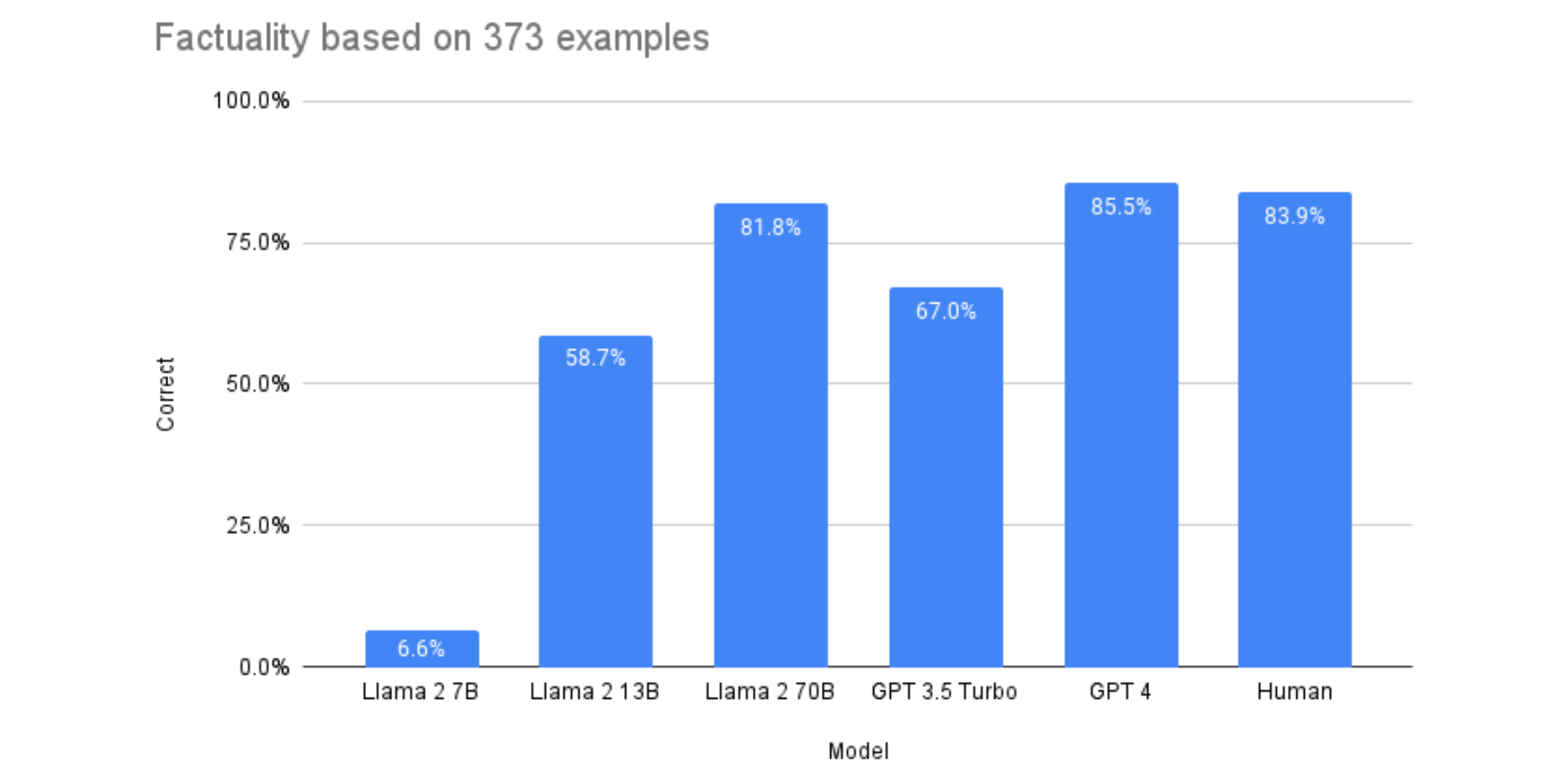

I’d like to set up Llama 2 on my system, is there an LLM community on Lemmy yet or do I just need to dive in deep and dark?

Reddit's r/LocalLLaMA is the best place to start. The AI communities on Lemmy are too small.

I’m still avoiding reddit out of principle, might check on that eventually but I’ll make at least a token effort to find the answers elsewhere first.

If you are comfortable with Python, check Hugging Face.

To see how to set up a GUI, check https://github.com/camenduru/text-generation-webui-colab, which lets you test models on Colab.

You can copy the commands there and adapt them to run it locally.

Perfect! Exactly the resource I was hoping for!

My dinosaur machines might barely be able to cope with the smallest model… maybe… it’ll be fine.

Dive deep and dark with blindfolds.