Hello sailors,

I have been job hunting for a while, and I've felt at a big disadvantage in my search because I don't have access to high-quality LLMs. Writing cover letters is honestly so bullshit. GPT-3 is honestly quite bad nowadays, but as a true pirate at heart I couldn't bring myself to cough up the coin for OpenAI's GPT-4 out of principle. I hate them for putting their cutting-edge technology behind a paywall, locking it away for their own gain. I feel like this is not what the internet was supposed to be. So today, call me the great emancipator, cuz I'm teaching u how to get that shi for free, baby.

Requirements: Docker

It's all gonna be based off this GitHub repo: gpt4free

Installing through Docker (there's also a way to install with Python pip if that's more convenient for you, but the Docker route worked for me):

-

docker pull hlohaus789/g4f -

docker run -p 8080:8080 -p 1337:1337 -p 7900:7900 --shm-size="2g" -v ${PWD}/hardir:/app/hardir hlohaus789/g4f:latest -

Open up the webui in your browser at localhost:8080

-

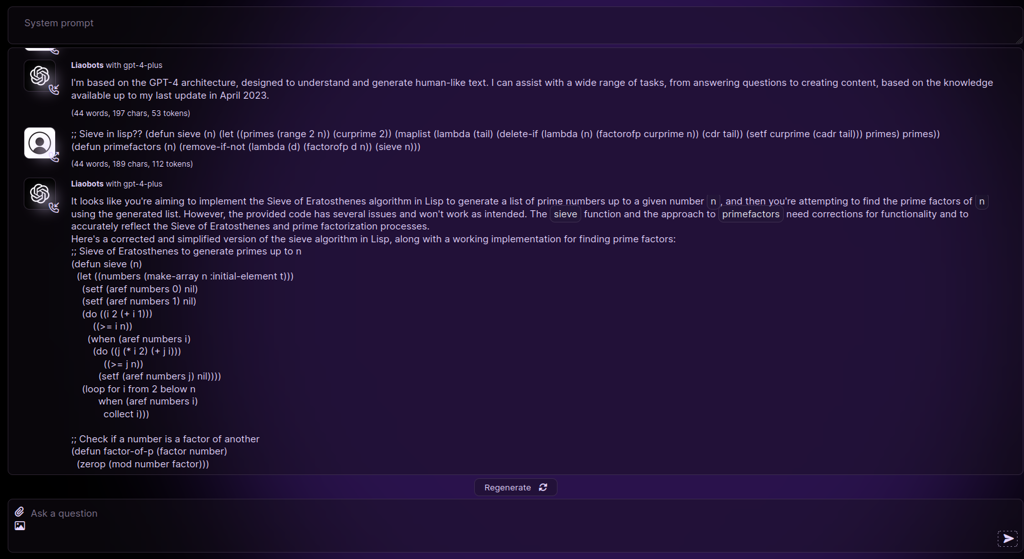

In the “Provider” dropdown in the bottom look for “Liaobots”

-

Choose “gpt-4-plus” under the “Models” dropdown

??? Profit

The cool thing about gpt4free is that there are a lot of providers and a lot of models to choose from! So if gpt-4-plus from Liaobots doesn't work for you, you can easily switch to something else. Do note that some models require you to provide an authentication token or be logged in, but most of them work right out of the box tho.

*this post was not made with any use of an llm I promise ;)

^^ list of GPT-4 providers

I see that it uses a local model. How good is it really? How fast are the responses on consumer-grade GPUs?

It lets you pick from a whole bunch of local models to download, some trained by Microsoft and the like. In my experience it's pretty responsive on the 2070 Super I've had for years now, but the responses aren't as good as something like GPT-4. If you choose a larger model, they're probably about on par with GPT-3 in most cases.

LM Studio is excellent for downloading and running local models. Performance and quality have improved vastly since the first versions of local LLMs came out. I get near-instant responses on my laptop, which only has a GTX 1650 in it.

That said, they still fail to produce much of value. YMMV.