Any tool can be a hammer if you use it wrong enough.

A good hammer is designed to be a hammer and only used like a hammer.

If you have a fancy new hammer, everything looks like a nail.

I am really pissed off that Reddit used AI to shadowban my account. They never told me the reason. They just hid my interactions from the outside world, assuming that I am dumb and would never find out.

As a result, every new account I try to create is shadowbanned after 5 minutes because my phone is blacklisted.

I appeal every day but never get a response. I am sure all of this is handled by AI.

I wouldn’t even call this AI, they just have an algorithm. A poorly tuned one.

I encountered the same issue, and that’s exactly why I’m here on Lemmy. Reddit and its shadowbanning habits have to go away.

I don’t like having persistent social accounts, so I make a new one for each topic, whether on Reddit or GitHub: purpose-specific accounts that do one thing. And for the last few years, every time I create an account like that, it’s immediately shadowbanned. It’s frustrating, because my contributions are thrown away, and it’s dishonest, because these services don’t have the politeness to even tell you you’re not allowed to participate.

Because of that, a federated system like Lemmy must survive. That’s why we’re here.

You wouldn’t call it AI. But you can bet the C suite sure calls it AI.

Conway’s Game of Life is AI :)

You’re right! If Conway’s game of life wasn’t AI a year ago, it sure as hell is now!

I wonder how many rubes I can sell it to…?

First make it Conway’s as a service, then get at least a $3 billion valuation and get your seed round in. Then sell it before you actually have to deliver any revenue.

It’s perfect

Some hammers use enough energy to power a small country in order to show you a cake recipe without an entire backstory and 50 ads.

Without ads, for now.

A cake recipe that instructs you to put non-toxic glue in it, and some small pebbles on top of it.

Then it WAS worth it.

This hammer does the same without using the energy of a small country https://theskullery.net

Sometimes it is more like “AI is like a hammer in a world full of screws.”

Welcome to capitalism.

AI is the new thing, so cramming it into a product increases funding and/or the stock price.

Even if it hurts the product.

> Even if it hurts the product

Because the product is not the product. The stock valuation is the product.

Probably just as difficult as justifying the “AI” component of this light: https://amzn.eu/d/08yAcZpp

Nope. It’s more like that weird thing you bought at 3 a.m. off the Home Shopping Network because you were in a really bad place and thought it would make you feel better.

Now it’s taking up space and you don’t want to throw it out because that would mean you’re a failure…

It’s more like Jefferson’s dumbwaiter, in that it was created by someone who verbally supported an egalitarian utopian vision of society, but the device itself is a scale model of an exploitative social system. At one station of the device, unpaid/low-paid labor operates out of view of the user, and then at the other station, the user enjoys an almost-magical appearance of an answer to their request.

No tool is “just a tool”, after all. In that way, AI is like a hammer.

(That section of the video leans heavily on Do Artifacts Have Politics?, which is a pretty short and accessible essay. If you’re not convinced that artifacts do have politics, and you don’t want to watch the video, just read a few paragraphs of the essay.)

It’s not good at replacing your job, but it’s good at convincing your boss that it can.

Basically what I said to people who asked me for my opinion on AI.

My exact words were: “AI is a tool like a hammer. If you hit your finger, don’t blame the tool; you simply used it wrong.”

Except AI is a pipe wrench pretending to be a hammer.

True.

And I get where you’re going, but pipe wrenches are still way too useful in too many situations. AI is like a disc brake compressor hand tool, being sold as the solution to everything else.

When I mention how much I like it for compressing a disc brake, I feel like people look at me like I’m crazy for falling off the hype train.

Edit: And by people, I mean AI hype shill bots, probably.

Yes, but your disc brake compressor tool could also be a hammer.

Calling (mm)LLMs AI is just corpo bullshit. But hey, it’s fancy, right?

Complaining that it’s called AI is like complaining that smartphones are called smart. There’s no stopping it, you just end up sounding like an old man yelling at the cloud. (Which isn’t really a cloud, but we still call it that)

Nah, smartphones actually are “smarter” than feature phones, in that you can do way more than basic stuff like calling, messaging people, running a simple calculation, having a calendar, etc.

You not liking it doesn’t make it any less AI. I don’t remember that many people complaining when we called the code controlling video game characters AI.

Or called our mobile phones “cell phones”, despite not being organic. Tsk.

Except cell phones or cellular phones refer to the structure a mobile network is built on: a mesh of cell towers.

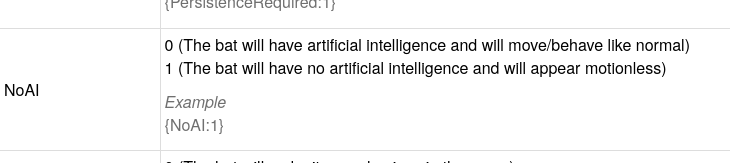

Provably wrong: https://www.digminecraft.com/data_tags/bat.php. Look at the NBT tags, specifically the description of the NoAI NBT tag.

I have no idea what you just said.

I showed you proof that AI is sometimes used to mean artificial intelligence when describing code that controls video game enemies.

> In video games, artificial intelligence (AI) is used to generate responsive, adaptive or intelligent behaviors primarily in non-playable characters (NPCs) similar to human-like intelligence. Artificial intelligence has been an integral part of video games since their inception in the 1950s.

Literally Wikipedia: https://en.wikipedia.org/wiki/Artificial_intelligence_in_video_games

Pretty sure they were, and still are, called bots though, at least in the context of first-person shooters.

Look at the NBT tags for bats, for example. There, AI means artificial intelligence.

Next thing you’re gonna say is that boids are AI too…

Just because Mojang decided to name that flag NoAI doesn’t mean it uses AI to govern its behavior.

Descriptivism advocates, when it comes to AI: smh.

Software developer, here.

It’s not actually AI. A large language model is essentially autocomplete on steroids. Very useful in some contexts, but it doesn’t “learn” the way a neural network can. When you’re feeding corrections into, say, ChatGPT, you’re making small, temporary, cached adjustments to its data model, but you’re not actually teaching it anything, because by its nature, it can’t learn.

I’m not trying to diss LLMs, by the way. Like I said, they can be very useful in some contexts. I use Copilot to assist with coding, for example. Don’t want to write a bunch of boilerplate code? Copilot is excellent for speeding that process up.
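For a feel of what “autocomplete” means at its very simplest, here is a toy next-word predictor built from bigram counts. This is only an analogy for the prediction task: an LLM is a neural network trained on vastly more text that conditions on far more context than one previous word. The function names and the corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def build_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def suggest(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())  # .get() does not create a new key
    if not counts:
        return None
    return counts.most_common(1)[0][0]  # ties go to the first follower seen


def complete(follows, start, n_words=3):
    """Greedily append the most likely next word, up to n_words times."""
    out = [start]
    for _ in range(n_words):
        nxt = suggest(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


if __name__ == "__main__":
    # Hypothetical toy corpus, lowercased with punctuation removed.
    corpus = ("any tool can be a hammer if you use it wrong enough "
              "a good hammer is designed to be a hammer and only used like a hammer")
    follows = build_bigrams(corpus)
    print(suggest(follows, "a"))       # hammer
    print(complete(follows, "a", 3))   # a hammer if you
```

Nothing here is “learned” at query time: once the counts are built, calling `suggest` only reads them.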

LLMs are part of AI, which is a fairly large research domain spanning math and computer science, including machine learning among others. God, even linear regression can be classified as AI: that term is reeeally broad.
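To make the linear regression point concrete, here is a minimal sketch of ordinary least squares fitting a line `y = slope * x + intercept` with the closed-form formulas. It is plain arithmetic, yet it is routinely filed under machine learning. The function names and the toy data are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (closed form)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new x."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Toy data (hypothetical) lying exactly on y = 2x + 1.
    model = fit_line([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(model)               # (2.0, 1.0)
    print(predict(model, 10))  # 21.0
```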

I mean, I guess the way people use the term “AI” these days, sure, but we’re really beating all specificity out of the term.

It’s a research domain that contains statistical methods and knowledge modeling, among others. That’s not new, but the fact that it’s marketed like that everywhere is new.

AI is really not a specific term. You may be thinking of general AI; I suspect that’s what you mean when you say AI?

It’s always been this broad, and that’s a good thing. If you want to talk about AGI, then say AGI.

I know that they’re “autocomplete on steroids” and what that means; I don’t see how that makes them any less AI. I’m not saying that LLMs have the magic sauce needed to be considered truly “intelligent”. I’m saying that AI doesn’t need any magic sauce to be AI. The code controlling bats in Minecraft is called AI, and no one complained about that.

> Very useful in some contexts, but it doesn’t “learn” the way a neural network can. When you’re feeding corrections into, say, ChatGPT, you’re making small, temporary, cached adjustments to its data model, but you’re not actually teaching it anything, because by its nature, it can’t learn.

But that’s true of all (most?) neural networks? Are you saying neural networks are not AI and that they can’t learn?

NNs don’t retrain while they are being used: they are trained once, and after that they cannot learn new behaviour or correct existing behaviour. If you want to make them better, you need to run them a bunch of times, collect and annotate good/bad runs, then retrain them from scratch (or fine-tune them) with this new data. Just like LLMs, because LLMs are neural networks.
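The train-once, use-many split can be sketched with the smallest possible neural network, a single perceptron. This is a toy stand-in chosen for illustration (real LLM training is vastly larger and uses gradient descent rather than the perceptron rule), but the separation is the same: `predict` only reads the weights, and changing behaviour means running `train` again on new labeled data. The class and the AND/OR datasets are hypothetical examples.

```python
class Perceptron:
    """Smallest possible neural network: one neuron, weighted sum + bias, step at 0."""

    def __init__(self, n_inputs):
        self.weights = [0.0] * n_inputs
        self.bias = 0.0

    def predict(self, x):
        # Inference: only *reads* the weights; nothing is changed here.
        s = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return 1 if s > 0 else 0

    def train(self, data, epochs=20, lr=1.0):
        # Training: a separate step that nudges the weights toward the labels
        # (classic perceptron update rule, chosen here for simplicity).
        for _ in range(epochs):
            for x, label in data:
                error = label - self.predict(x)
                self.weights = [w + lr * error * xi for w, xi in zip(self.weights, x)]
                self.bias += lr * error


# Toy labeled data (hypothetical): logical AND, and later logical OR.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

if __name__ == "__main__":
    model = Perceptron(2)
    model.train(AND)
    print([model.predict(x) for x, _ in AND])  # [0, 0, 0, 1]

    # Using the trained model many times leaves its weights exactly as they were.
    frozen = (list(model.weights), model.bias)
    for _ in range(1000):
        model.predict((0, 1))
    print((model.weights, model.bias) == frozen)  # True

    # "Correcting" it means collecting new labeled examples and training again.
    model.train(OR)
    print([model.predict(x) for x, _ in OR])  # [0, 1, 1, 1]
```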

They are neural networks which are some of the oldest AI tech we have.

You can hate them, but they are by definition AI.

Uhm, no. AI relies on neural networks (which are just weighted gates), but neural networks are not by definition AI.

I’m sorry but they absolutely are.

Sorry, but I disagree.

I don’t see the “is not actual AI” argument.

Since the ’80s, AI has just been algorithms and proposals for neural networks.

It never needed to have a “soul” or “be sentient” to be artificial intelligence.

Even a simple Tic-Tac-Toe opponent algorithm has been called AI without much complaint.

Also, AI didn’t get called AI by corporations. The name dates back to when the technology was being proposed as a concept in universities.
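A “simple Tic-Tac-Toe opponent” is classically a minimax search: try every move, assume the opponent always replies with its best move, and keep the move with the best guaranteed outcome. Here is a minimal sketch, assuming the board is a 9-character string read row by row (`"X"`, `"O"`, or a space for an empty cell). The names `LINES`, `winner`, and `minimax` are hypothetical, not taken from any particular game.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board, as indices into the 9-character string.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)  # positions repeat a lot, so memoize them
def minimax(board, player):
    """Score a position with `player` to move, from X's point of view.

    Returns (score, move): +1 means X can force a win, -1 means O can,
    0 means best play on both sides ends in a draw. `move` is None when
    the game is already over.
    """
    w = winner(board)
    if w is not None:
        return (1 if w == "X" else -1), None
    moves = [i for i, cell in enumerate(board) if cell == " "]
    if not moves:
        return 0, None  # board full and nobody won: draw
    other = "O" if player == "X" else "X"
    best = None
    for m in moves:
        child = board[:m] + player + board[m + 1:]
        score, _ = minimax(child, other)
        # X keeps the highest score, O the lowest; ties keep the first move found.
        if (best is None
                or (player == "X" and score > best[0])
                or (player == "O" and score < best[0])):
            best = (score, m)
    return best


if __name__ == "__main__":
    # X holds top-left and top-middle, O holds middle-left and center; X to move.
    print(minimax("XX OO    ", "X"))  # (1, 2): X completes the top row
    print(minimax(" " * 9, "X")[0])    # 0: perfect play from an empty board is a draw
```

No learning, no data, just exhaustive search, and it has been called game AI for decades.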